Alignment Gap: Why "Smart" Agents Fail in Production

Your agent can look great on paper yet still miss the mark—failing to close tickets, earn trust, or drive outcomes. Learn how Mentiora closes the gap by measuring what stakeholders care about and optimizing agents against those signals.

Technically, your AI agent is working. The latency is low. The hallucination rate on general facts is near zero. The monitoring dashboard is all green.

Yet, business stakeholders are frustrated. Support tickets are not closing. The sales team does not trust the bot with leads.

Why is there such a gap between "Technical Success" and "Business Value"?

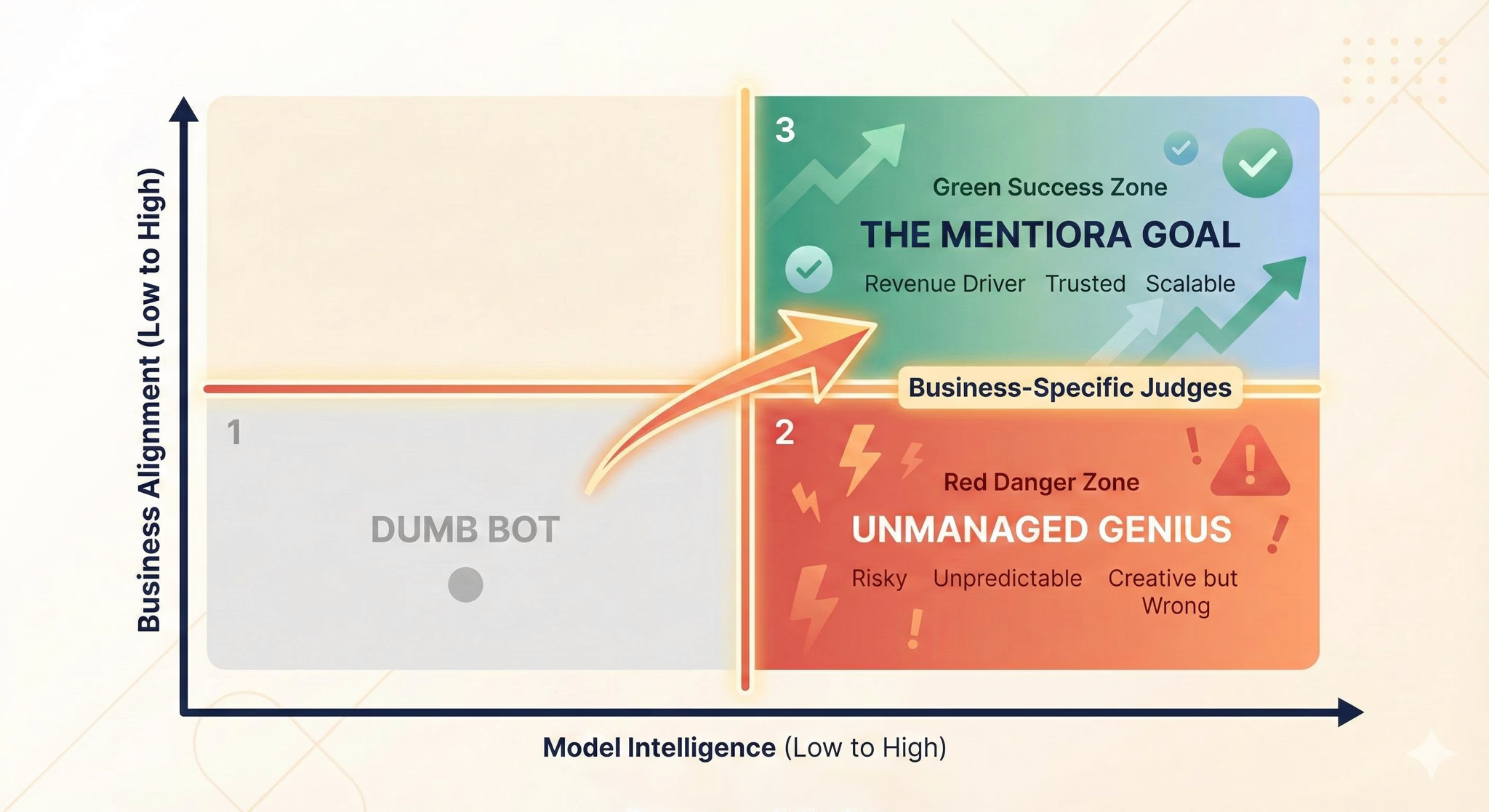

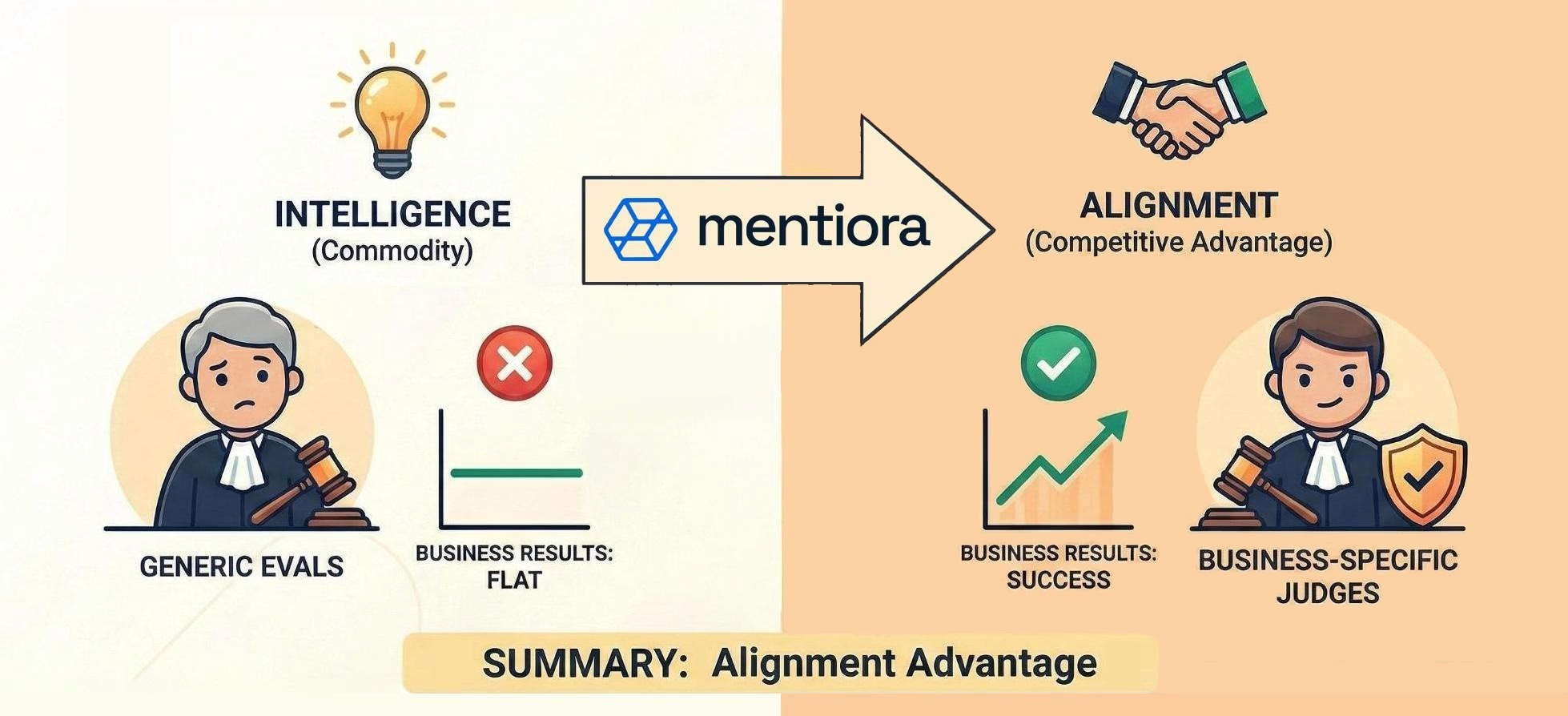

At Mentiora, we see a recurring pattern where companies optimize for Model Intelligence but neglect Business Alignment.

Building an agent is just the first step. The real challenge is teaching that agent the tacit knowledge of your business.

Here is why your agent might be failing, and the methodology required to fix it.

The "Unmanaged Genius" Paradox

The modern LLM is like a brilliant, unmanaged genius. It has encyclopedic knowledge and perfect reasoning. But intelligence without context is a liability.

If you do not explicitly enforce your business logic, the model will default to its general training.

- The Logic: "The customer is unhappy, so I should offer a full refund to resolve the issue."

- The Business Reality: You have a strict no-refund policy for this user tier.

The model did not fail because it lacked intelligence. It failed because it was not aligned with your specific constraints.

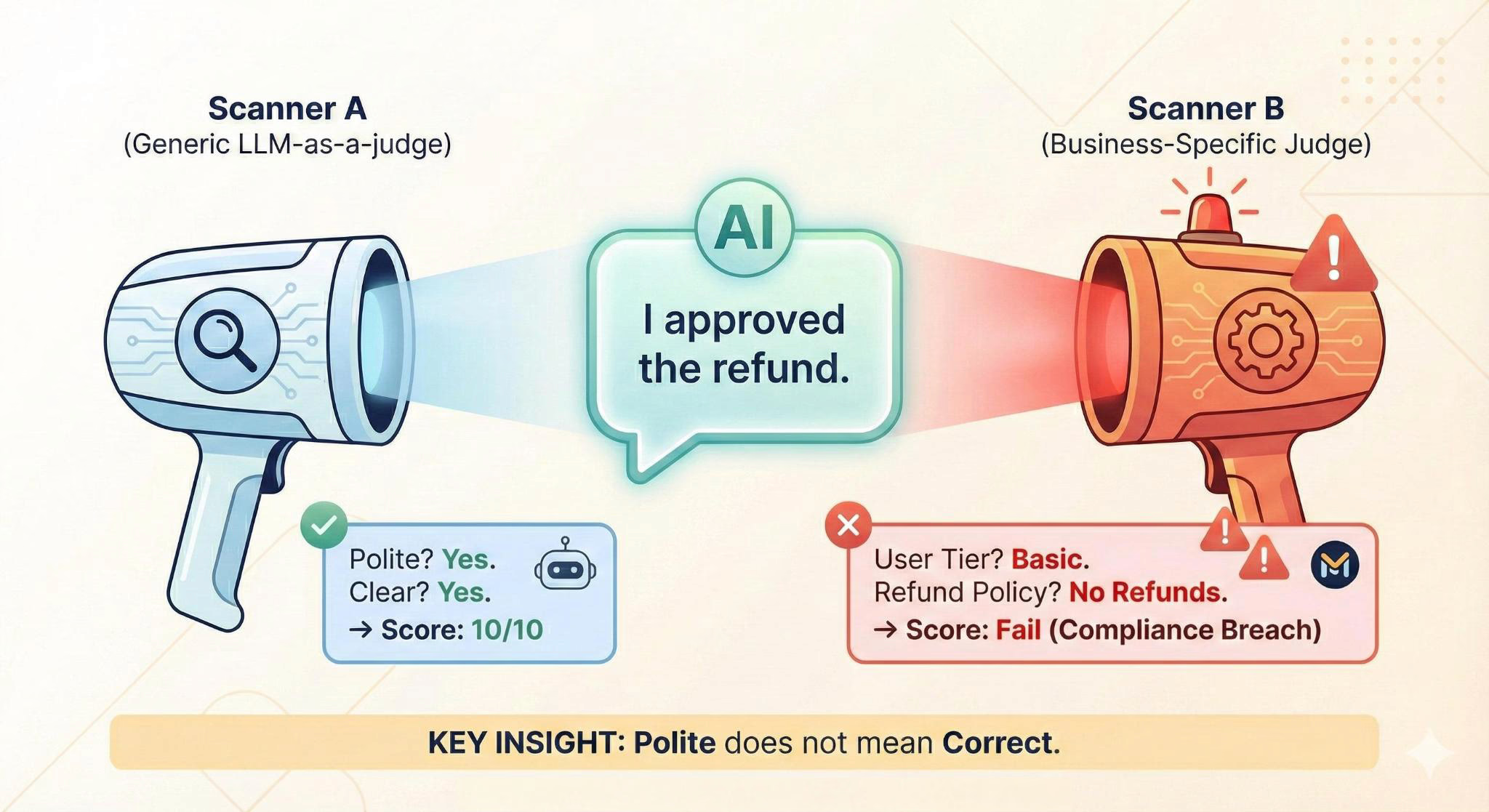

The Problem with Generic Evals

Most teams attempt to catch these issues using standard automated evaluations (generic LLM-as-a-judge). They ask: "Was this answer helpful?"

In enterprise environments, "Helpfulness" is a vanity metric. You need metrics that map to business risks.

- Healthcare: A compassionate answer that misses a mandatory disclaimer is "helpful" but legally catastrophic. The metric must be Medical Boundary Adherence.

- Fintech: A quick answer that skips authentication is a security breach. The metric is Protocol Compliance.

- Support: A polite apology that does not solve the issue drives churn. The metric is Resolution Quality.

If you are using generic evaluations, you are measuring the wrong things. You need Business-Specific Judges.

The Solution: Active Labeling and Specialist Judges

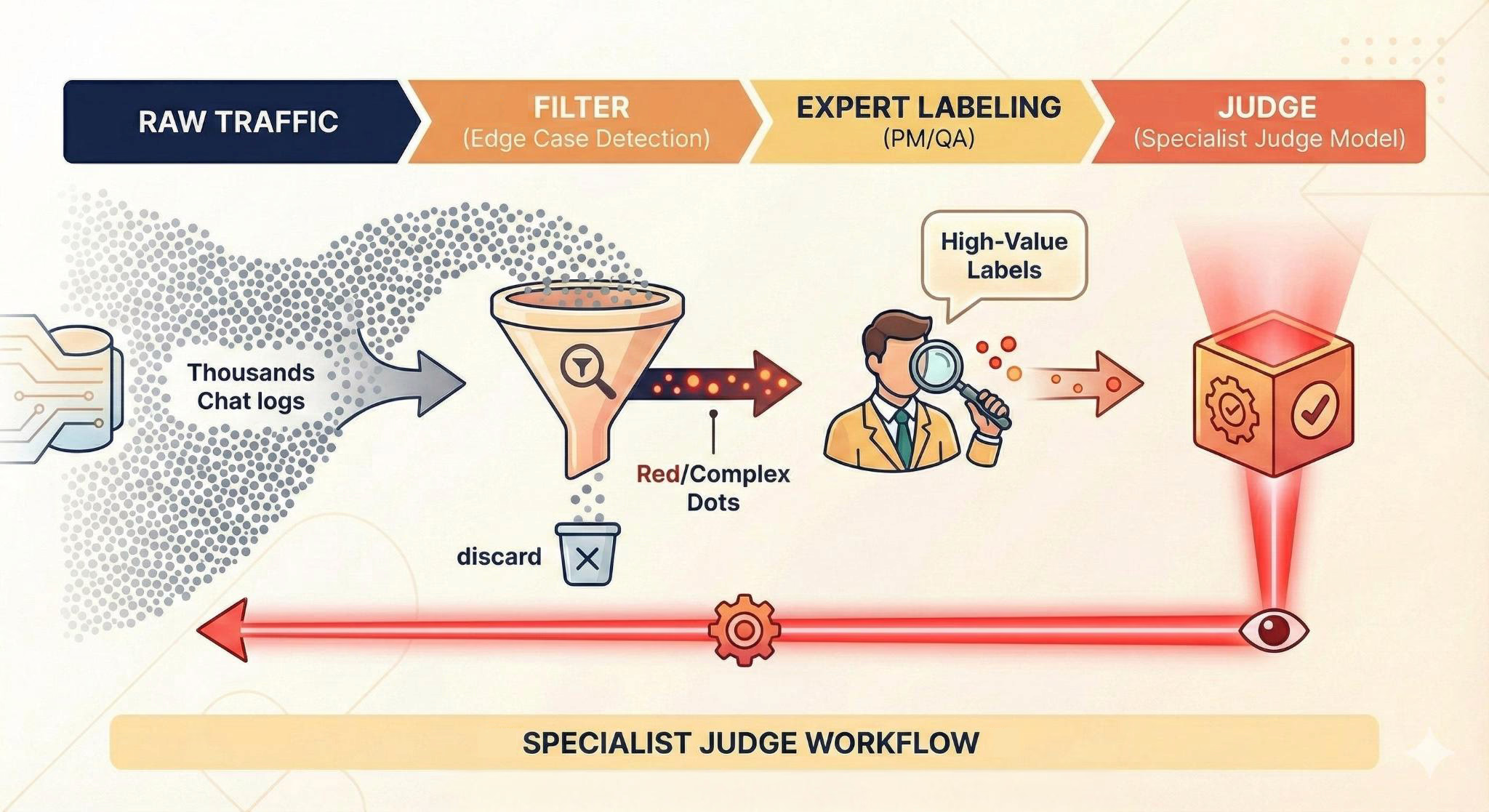

How do you teach a model your specific business nuances without manually reviewing 100,000 logs?

You cannot automate this fully from day one. You need a process to extract knowledge from your experts and codify it.

The Mentiora platform is built on this specific workflow:

1. Stop Random Sampling

Reviewing 1% of random chats is statistically insignificant. You will miss the "Black Swan" failures. You must filter for Edge Cases, such as interactions where the model's confidence was borderline or the topic was sensitive.

2. Product-Led Labeling (The Golden Set)

Do not outsource this to general data labelers.

- Who: Your Lead Product Managers, Policy Owners, and Senior QA Leads.

- What: They label only the complex edge cases.

- Why: You are not just checking grammar. You are extracting tacit business knowledge (the "unwritten rules") from your strategy team.

3. Construct the Specialist Judge

We use these expert labels to construct a specialized Judge.

This is not just a system prompt. It is a rigorous evaluation system that uses your "Golden Set" to grade new conversations with the precision of your best employee.

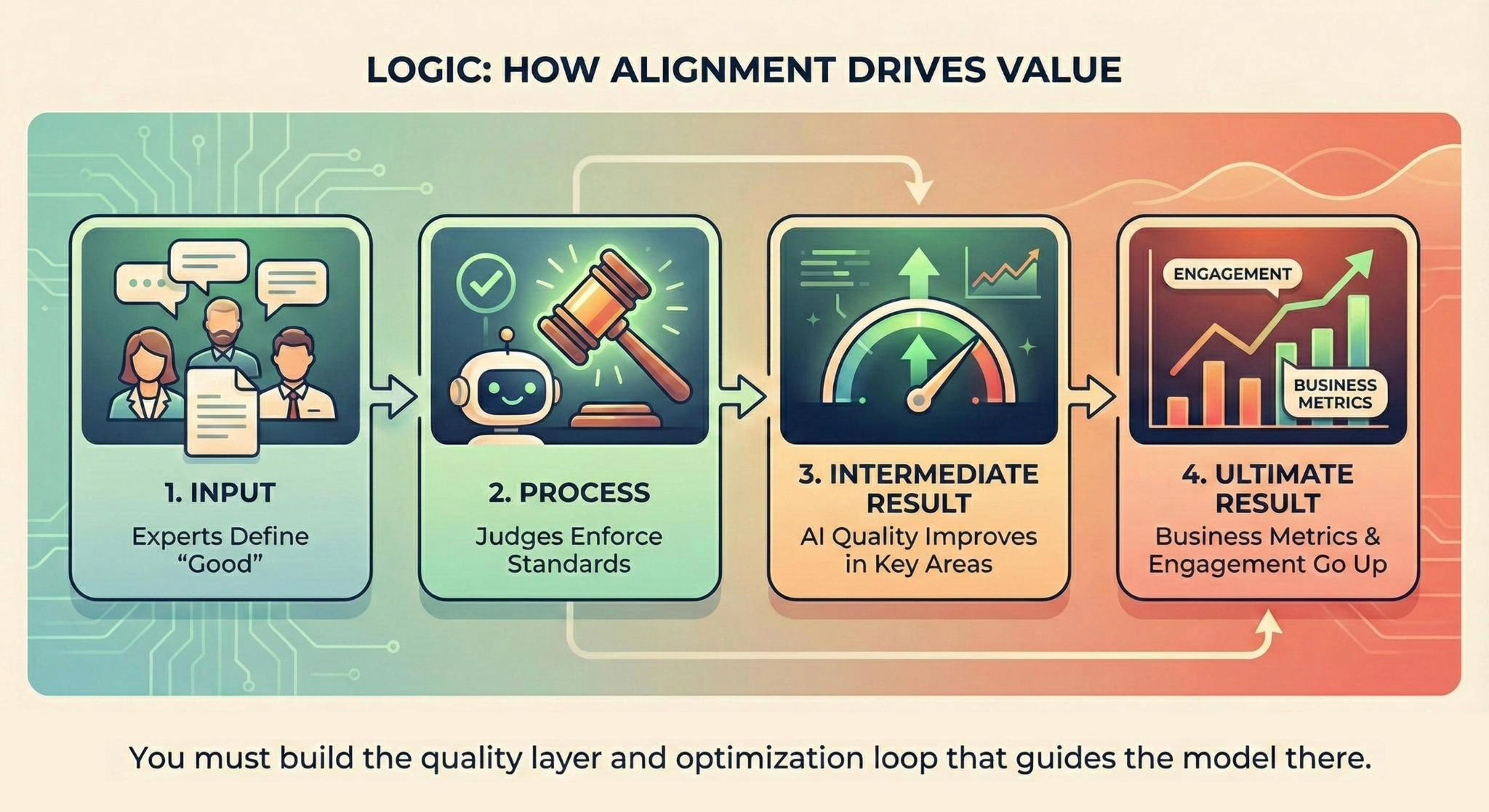

The Logic: How Alignment Drives Value

Why does this process actually move the needle?

Your business experts already know exactly what drives your metrics, whether it is conversions, retention, or trust. But a generic LLM does not know these correlations.

The solution is to ensure that every judge you build, whether for tone, accuracy, or safety, is aligned with what your users actually value.

By injecting expert understanding into your evaluation suite, you force the AI to optimize for your users' needs, which naturally drives business goals.

- Input: Experts define what "Good" looks like for your specific users.

- Process: Your judges enforce these standards across every interaction.

- Intermediate Result: The AI quality improves in the specific areas your users care about.

- Ultimate Result: Engagement and business metrics go up.

You cannot prompt your way to business value directly. You must build the quality layer and optimization loop that guides the model there.

The Real-World Payoff

This is not just theory. We see the impact of this shift daily with Mentiora clients.

When companies replace generic "vibes" with Business-Specific Judges, the operational dynamic changes.

- Trust replaces fear: Teams stop manually checking every chat log because they trust the automated Judge to catch violations.

- Complexity increases: Because the guardrails are precise, our clients feel safe letting the bot handle complex, sensitive workflows that were previously off-limits.

- Metrics improve: We see deflection rates rise and false positives drop. This happens because the bot is finally being judged on the right criteria.

This is the difference between a demo that looks cool and a product that scales.

Summary

If your dashboard is green but your business results are flat, stop debugging the model and start debugging your evaluation criteria.

Intelligence is a commodity. Alignment is the competitive advantage.

Ultimately, success does not depend on which model you use, but on how rigorously you test it.

Don't judge your AI on how smart it is. Judge it on how well it knows your business.